ML for Early Heart Failure Detection

Role: ML Engineer | Tools: Python (NumPy, Pandas, Matplotlib), scikit-learn (for metrics/plots only) | Data: Kaggle (Heart Failure Prediction)

Feature importance from the Logistic Regression model, highlighting ECG slope, chest pain type, sex, and exercise-induced angina as the top predictors.

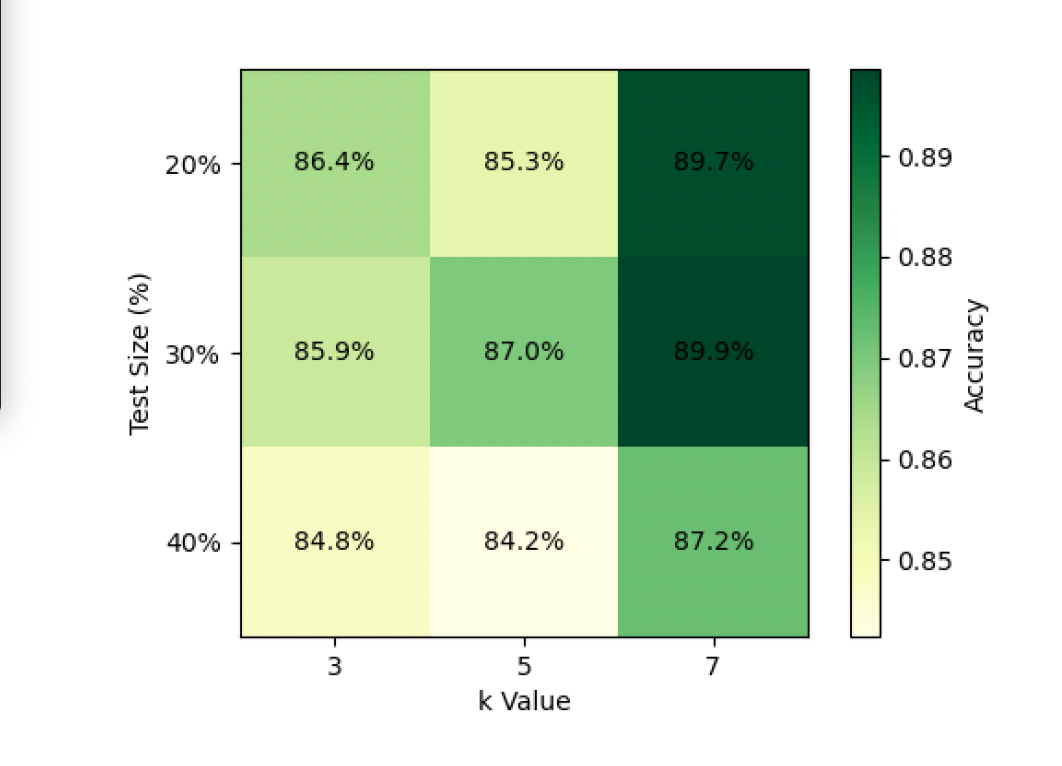

Heatmap of kNN accuracy by k-value and train/test split: highest accuracy (89.86%) achieved at k=7 with a 70/30 split.

Quick Links: GitHub | Report (PDF)

Executive Summary

Collaborated with a partner to build and compare four supervised learning models (k-Nearest Neighbors, Logistic Regression, Decision Tree, and Random Forest) to predict heart failure risk from routine patient attributes. I implemented kNN and Logistic Regression from scratch, while my partner implemented the tree-based models. We translated our findings into actionable recommendations for early-risk identification and care prioritization.

Business Problem

Physicians, payers, and patients need reliable, interpretable signals to identify high-risk patients early. We asked:

Which model predicts risk most accurately?

Which features most strongly indicate risk?

How much better are ML models than a simple heuristic baseline?

Data

Observations: 918 patients

Features (11): age, sex, chest pain type, resting BP, cholesterol, fasting blood sugar, resting ECG, max heart rate, exercise-induced angina, ST depression (“oldpeak”), and ECG slope.

Target: heart failure event (binary)

Approach

My contributions:

Implemented from scratch: kNN (Euclidean distance; k ∈ {3,5,7}); Logistic Regression (sigmoid, gradient descent, ridge regularization with C ∈ {0.01, 0.1, 1})

Heuristic baseline creation and evaluation

Feature weight inspection for interpretability

Partner’s contributions: Decision Tree and Random Forest implementation for comparison

Evaluation metrics: Accuracy, Precision/Recall/F1, ROC AUC
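As a rough illustration of the from-scratch kNN described above, the following sketch classifies each test row by majority vote among its k nearest training rows under Euclidean distance. It is not the project's actual code: the function name `knn_predict` and the toy data are hypothetical, and it assumes features are already numeric (categorical columns encoded) and on comparable scales, which this write-up does not confirm.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=7):
    """Predict binary labels (0/1) by majority vote among the k nearest training points."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    # Pairwise Euclidean distances, shape (n_test, n_train)
    diffs = X_test[:, None, :] - X_train[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=2))
    # Indices of the k closest training points for each test row
    nearest = np.argsort(dists, axis=1)[:, :k]
    # Fraction of positive neighbours; odd k avoids ties for binary labels
    votes = y_train[nearest].mean(axis=1)
    return (votes > 0.5).astype(int)

# Toy data (hypothetical, not the Kaggle dataset): two well-separated clusters
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(loc=-2.0, size=(30, 2)),
               rng.normal(loc=2.0, size=(30, 2))])
y = np.array([0] * 30 + [1] * 30)

for k in (3, 5, 7):  # the k values explored in this project
    preds = knn_predict(X, y, X, k=k)
    print(f"k={k}: training accuracy = {(preds == y).mean():.3f}")
```

Because Euclidean distance is sensitive to feature scale, a wide-ranging column such as cholesterol would dominate the vote unless features are standardized first.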

Results

Best-performing model: kNN (k=7, 70/30 train/test split), 89.86% accuracy.

kNN: Accuracy 89.86%, Precision 93%, Recall 90%, F1 91%

Logistic Regression: Accuracy 88.04%, ROC AUC 0.945, Precision 92%, Recall 88%, F1 90%

Heuristic baseline: Accuracy 56.97%, far below both kNN and Logistic Regression

Tree models: Random Forest outperformed Decision Tree as expected, but trailed kNN overall.
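For readers less familiar with the metrics reported above, here is a minimal sketch of how accuracy, precision, recall, and F1 follow from the confusion-matrix counts. The function name `binary_metrics` and the example labels are hypothetical. The project itself used scikit-learn for metrics, so this is only an illustration of the definitions.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Made-up labels purely for illustration
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 1]
print(binary_metrics(y_true, y_pred))
```

As a consistency check, the harmonic mean of the rounded kNN precision and recall (0.93 and 0.90) is about 0.91, and for Logistic Regression (0.92 and 0.88) about 0.90, matching the F1 values listed above.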

Insights & Recommendations

Top signals: ECG slope, chest pain type, sex, and exercise-induced angina were the strongest predictors.

Clinical takeaway: Use the model to flag high-risk patients for additional screening and resource prioritization.

Operational: Start with an interpretable model (Logistic Regression) to build clinician trust, then augment with kNN for higher raw accuracy.
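The interpretability argument rests on reading feature weights off a fitted logistic regression. A minimal sketch of that idea follows, assuming batch gradient descent on the mean log-loss with an L2 (ridge) penalty, standardized inputs, and C interpreted as inverse regularization strength (the scikit-learn convention, which may not match the project's definition). The names `fit_logistic_l2` and `rank_features`, the learning rate, the iteration count, and the synthetic data are all hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic_l2(X, y, C=1.0, lr=0.1, n_iter=2000):
    """Gradient descent on mean log-loss + (1 / (2 * C * n)) * ||w||^2.

    Assumption: C is the inverse of the regularization strength.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    lam = 1.0 / C
    for _ in range(n_iter):
        err = sigmoid(X @ w + b) - y          # predicted probability minus label
        grad_w = X.T @ err / n + lam * w / n  # log-loss gradient + ridge term
        grad_b = err.mean()                   # the bias is not regularized
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def rank_features(w, names):
    """Sort features by absolute weight, largest first."""
    order = np.argsort(-np.abs(w))
    return [(names[i], float(w[i])) for i in order]

# Synthetic data (not the Kaggle dataset): only the first column drives the label
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 3))
y_demo = (X_demo[:, 0] > 0).astype(int)
names = ["feature_a", "feature_b", "feature_c"]  # placeholder names

w, b = fit_logistic_l2(X_demo, y_demo, C=1.0)
for name, weight in rank_features(w, names):
    print(f"{name}: {weight:+.3f}")
```

Weight magnitudes are only comparable across features when the inputs share a scale, which is why the sketch assumes standardized features. The sign of each weight indicates whether the feature pushes the prediction toward or away from the positive class.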

Impact

Demonstrated that ML materially outperforms simple rules on this problem: the best model (kNN, 89.86%) beat the heuristic baseline (56.97%) by about 33 accuracy points.

Provided a framework for balancing accuracy against interpretability, which is key for clinical adoption and stakeholder buy-in.